Exploring Large Language Models Capabilities to Explain Decision Trees

Hybrid Human AI Systems for the Social Good (HHAI)

Paulo Bruno de Sousa Serafim¹

Pierluigi Crescenzi¹

Gizem Gezici²

Eleonora Cappuccio³,⁴

Salvatore Rinzivillo⁴

Fosca Giannotti²

¹ Gran Sasso Science Institute

² Scuola Normale Superiore di Pisa

³ Università di Pisa, Università di Bari Aldo Moro

⁴ Istituto di Scienza e Tecnologie dell’Informazione, CNR

Paper: [PDF]

Abstract

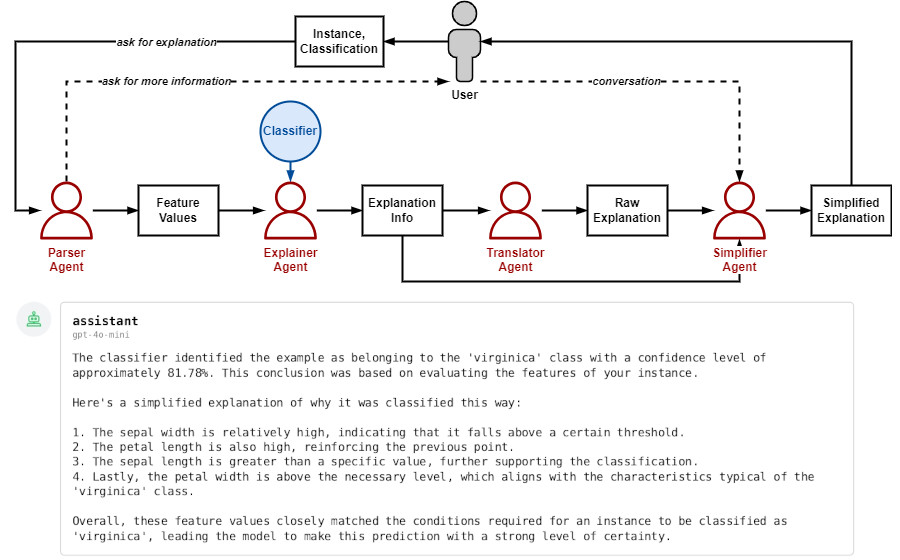

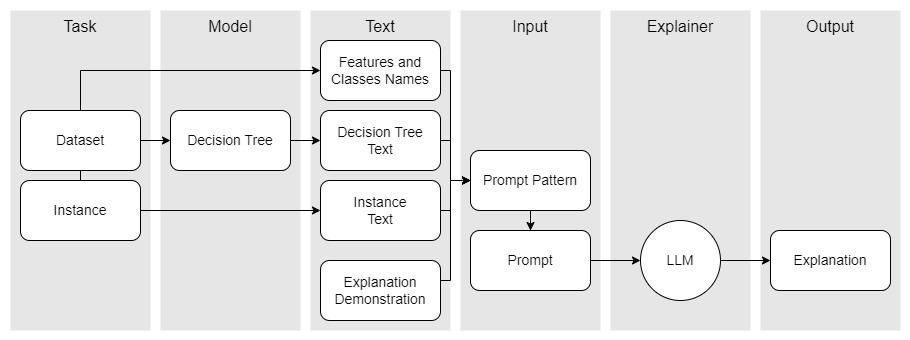

Decision trees are widely adopted in Machine Learning tasks because they are simple to operate and easy to interpret. However, following the decision path taken by a tree can be difficult in complex scenarios or when the user is unfamiliar with decision trees. Prior research has shown that converting outcomes to natural language is an accessible way to facilitate understanding for non-expert users in several tasks. More recently, there has been a growing effort to use Large Language Models (LLMs) as a tool for generating natural language text. In this paper, we examine the proficiency of LLMs in explaining decision tree predictions in simple terms through the generation of natural language explanations. By exploring different textual representations and prompt engineering strategies, we identify capabilities that make LLMs competent explainers and highlight potential challenges and limitations, opening further research possibilities on natural language explanations for decision trees.
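To make the idea of a "textual representation" of a prediction more concrete, the following is a minimal sketch, not the representation or prompts used in the paper. It assumes a tiny hand-built tree; the `Node` class, the `decision_path` and `build_prompt` helpers, the feature names, the thresholds, and the prompt wording are all hypothetical and chosen only for illustration. The resulting prompt string could then be sent to any LLM of choice.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    """Hypothetical binary decision tree node (illustration only)."""
    feature: Optional[str] = None      # feature tested at an internal node
    threshold: Optional[float] = None  # split threshold at an internal node
    left: Optional["Node"] = None      # branch taken when value <= threshold
    right: Optional["Node"] = None     # branch taken when value > threshold
    label: Optional[str] = None        # predicted class, set only on leaves


def decision_path(root: Node, sample: Dict[str, float]) -> Tuple[List[str], str]:
    """Walk the tree for one sample and record each test as a short sentence."""
    steps: List[str] = []
    node = root
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            steps.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            steps.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return steps, node.label


def build_prompt(steps: List[str], label: str) -> str:
    """Assemble a plain-text prompt asking for a non-expert explanation."""
    rules = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        "A decision tree made the following checks, in order:\n"
        f"{rules}\n"
        f"Prediction: {label}\n"
        "Explain this prediction in simple terms for a non-expert."
    )


# Toy tree with made-up thresholds (assumption, not taken from the paper).
tree = Node(
    feature="petal_length", threshold=2.5,
    left=Node(label="setosa"),
    right=Node(
        feature="petal_width", threshold=1.8,
        left=Node(label="versicolor"),
        right=Node(label="virginica"),
    ),
)

sample = {"petal_length": 4.7, "petal_width": 1.4}
steps, label = decision_path(tree, sample)
prompt = build_prompt(steps, label)
print(prompt)
```

Listing only the tests on the path actually taken keeps the prompt short; serializing the whole tree instead would be another possible representation, with a longer prompt as the trade-off.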

BibTeX

@inproceedings{serafim2024exploring,

title = {Exploring Large Language Models Capabilities to Explain Decision Trees},

author = {Serafim, Paulo Bruno Sousa and

Crescenzi, Pierluigi and

Gezici, Gizem and

Cappuccio, Eleonora and

Rinzivillo, Salvatore and

Giannotti, Fosca},

booktitle = {HHAI 2024: Hybrid Human AI Systems for the Social Good},

year = {2024},

series = {Frontiers in Artificial Intelligence and Applications},

editor = {Lorig, Fabian and

Tucker, Jason and

Lindstr{\"o}m, Adam Dahlgren and

Dignum, Frank and

Murukannaiah, Pradeep and

Theodorou, Andreas and

Yolum, Pinar},

volume = {386},

pages = {64--72},

doi = {10.3233/FAIA240183}

}